Kafka With Spring - A Rapid Dev Guide

Overview

Apache Kafka is an event streaming platform used to collect, process, store, and integrate data at scale.

Kafka is a distributed system consisting of servers and clients that communicate via a high-performance TCP network protocol.

In this tutorial, we will cover Spring support for Kafka. The Spring ecosystem provides Spring Kafka, which brings the simple, familiar Spring template programming model to Kafka: a KafkaTemplate for sending messages, and message-driven POJOs via the @KafkaListener annotation for receiving them.

Complete source code for this article can be found on GitHub.

Wait, boss!!! What is an event streaming platform?

What are Events?

Boss!!! In our daily lives we come across lots of events, big and small. So what exactly is an event here?

An event is any type of action, incident, or change that is identified or recorded by software applications, for example a website click, a payment, or a temperature reading.

What is Event Streaming?

Event streaming is the digital equivalent of the human body's central nervous system. It is the practice of capturing events in real time from sources such as databases, sensors, and mobile devices in the form of streams of events; storing these event streams for later retrieval; and manipulating, processing, and reacting to them in real time.

Event streaming thus ensures a continuous flow and interpretation of data so that the right information is at the right place at the right time.

Where Can We Use Event Streaming?

Hey Boss!!! We can use event streaming in a wide range of areas. Some of them are:

- Real-time processing of payments and financial transactions, for example in stock exchanges, insurance companies, and banks.

- Tracking and monitoring trucks, cars, and shipments in the automotive and logistics industries.

- Capturing and analyzing sensor data from IoT devices.

- Monitoring patients in hospital care units and ensuring timely treatment in emergencies.

How Kafka Is an Event Streaming Platform

Kafka combines three key capabilities, so you can implement your event streaming use cases end-to-end:

- To publish (write) and subscribe to (read) streams of events, including continuous import/export of your data from other systems.

- To store streams of events durably and reliably as long as you want.

- To process streams of events as they occur or retrospectively.

All of this functionality is provided in a distributed, highly scalable, elastic, fault-tolerant, and secure manner.

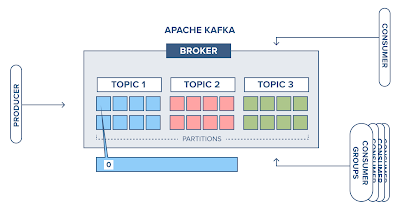

Kafka Topic

A topic is a log of events. Kafka's most fundamental unit of organization is the topic, which is something like a table in a relational database. You can create different topics to hold different kinds of events, and different topics to hold filtered and transformed versions of the same kind of data.
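
To make the "log of events" idea concrete, here is a minimal in-memory sketch in plain Java. It is only a toy model, not how Kafka stores data: the class name TopicLogSketch and its methods are made up for illustration. It shows the two properties that matter: events are only ever appended at the end, and reading from a position (Kafka calls this position an offset) does not remove anything.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy in-memory model of a topic as an append-only log (hypothetical, not Kafka's implementation). */
final class TopicLogSketch {
    private final List<String> events = new ArrayList<>();

    /** Appends an event to the end of the log and returns its position (offset). */
    long append(String event) {
        events.add(event);
        return events.size() - 1;
    }

    /** Returns a copy of all events from the given offset onward; the log itself is unchanged. */
    List<String> readFrom(long offset) {
        return List.copyOf(events.subList((int) offset, events.size()));
    }

    public static void main(String[] args) {
        TopicLogSketch payments = new TopicLogSketch();
        payments.append("payment-received");
        payments.append("payment-settled");

        // Two independent readers can start from different offsets.
        System.out.println(payments.readFrom(0));
        System.out.println(payments.readFrom(1));
    }
}
```

Because reading never deletes events, several consumers can read the same topic independently, each from its own position.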

With a broker running locally, a topic can be created from the command line:

$ bin/kafka-topics.sh --create --topic quickstart-events --bootstrap-server localhost:9092

Kafka Partitioning

Partitioning takes the single topic log and breaks it into multiple logs, each of which can live on a separate node in the Kafka cluster. This way, the work of storing messages, writing new messages, and processing existing messages can be split among many nodes in the cluster.
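
The idea can be sketched in a few lines of plain Java. This is a simplified illustration, not Kafka's code: the class PartitionSketch and the method partitionFor are hypothetical, and String.hashCode stands in for the murmur2 hash that Kafka's default partitioner applies to the serialized key. What it shows is that hashing the key picks the partition, so events with the same key always land in the same partition.

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified key-based partitioning (hypothetical sketch; Kafka itself hashes the key bytes with murmur2). */
final class PartitionSketch {

    /** Maps a key to a partition index in the range [0, numPartitions). */
    static int partitionFor(String key, int numPartitions) {
        // floorMod keeps the result non-negative even when hashCode() is negative.
        return Math.floorMod(key.hashCode(), numPartitions);
    }

    public static void main(String[] args) {
        int numPartitions = 3;

        // One small log per partition.
        List<List<String>> partitions = new ArrayList<>();
        for (int i = 0; i < numPartitions; i++) {
            partitions.add(new ArrayList<>());
        }

        String[][] events = {
            {"truck-1", "departed"},
            {"truck-2", "departed"},
            {"truck-1", "arrived"},
        };
        for (String[] event : events) {
            int partition = partitionFor(event[0], numPartitions);
            partitions.get(partition).add(event[0] + ":" + event[1]);
        }

        for (int i = 0; i < numPartitions; i++) {
            System.out.println("partition " + i + " -> " + partitions.get(i));
        }
    }
}
```

Both truck-1 events end up in the same partition, in the order they were written, which is why keys are commonly used to keep related events together.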

Kafka Brokers

From a physical infrastructure standpoint, Kafka is composed of a network of machines called brokers. Each broker hosts some set of partitions and handles incoming requests to write new events to those partitions or read events from them. Brokers also handle replication of partitions between each other.

Kafka Producers

The API surface of the producer library is fairly lightweight: In Java, there is a class called KafkaProducer that you use to connect to the cluster.

There is another class called ProducerRecord that you use to hold the key-value pair you want to send to the cluster.
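
Put together, sending one event might look like the sketch below. It assumes the kafka-clients library is on the classpath and a broker is reachable at localhost:9092 (the address used in the topic command earlier). The class name ProducerSketch, the key "sensor-1", and the value "21.5" are made up for illustration, and String keys and values are an assumption; real applications often use other serializers.

```java
import java.util.Map;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.common.serialization.StringSerializer;

/** Minimal producer sketch: connects to a local broker and sends one key-value pair. */
final class ProducerSketch {
    public static void main(String[] args) {
        // Connection and serialization settings (assumed values for a local setup).
        Map<String, Object> config = Map.of(
            ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092",
            ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName(),
            ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

        // try-with-resources closes the producer, which waits for pending sends to complete.
        try (KafkaProducer<String, String> producer = new KafkaProducer<>(config)) {
            // ProducerRecord holds the target topic plus the key-value pair.
            ProducerRecord<String, String> record =
                new ProducerRecord<>("quickstart-events", "sensor-1", "21.5");

            // send() is asynchronous; the record is batched and delivered in the background.
            producer.send(record);
        }
    }
}
```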

Kafka Consumers

Using the consumer API is similar in principle to the producer. You use a class called KafkaConsumer to connect to the cluster (passing a configuration map to specify the address of the cluster, security, and other parameters). Then you use that connection to subscribe to one or more topics. When messages are available on those topics, they come back in a collection called ConsumerRecords, which contains individual instances of messages in the form of ConsumerRecord objects. A ConsumerRecord object represents the key/value pair of a single Kafka message.
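
The flow described above might be sketched as follows. As before, this assumes kafka-clients on the classpath and a broker at localhost:9092. The class name ConsumerSketch and the group id "demo-group" are hypothetical, String keys and values are an assumption, and the loop is capped at ten polls only so the example terminates; a real consumer usually polls until it is shut down.

```java
import java.time.Duration;
import java.util.List;
import java.util.Map;
import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.apache.kafka.common.serialization.StringDeserializer;

/** Minimal consumer sketch: subscribes to one topic and prints whatever arrives. */
final class ConsumerSketch {
    public static void main(String[] args) {
        // The configuration map: cluster address, consumer group, and deserializers (assumed values).
        Map<String, Object> config = Map.of(
            ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092",
            ConsumerConfig.GROUP_ID_CONFIG, "demo-group",
            ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest",
            ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName(),
            ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());

        try (KafkaConsumer<String, String> consumer = new KafkaConsumer<>(config)) {
            consumer.subscribe(List.of("quickstart-events"));

            // Poll a fixed number of times so the sketch ends on its own.
            for (int i = 0; i < 10; i++) {
                // Each poll returns a batch (possibly empty) of records.
                ConsumerRecords<String, String> records = consumer.poll(Duration.ofSeconds(1));
                for (ConsumerRecord<String, String> record : records) {
                    System.out.printf("partition=%d offset=%d key=%s value=%s%n",
                        record.partition(), record.offset(), record.key(), record.value());
                }
            }
        }
    }
}
```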

Conclusion

In this article, we have covered the basics of Kafka. Stay tuned for in-depth tutorials and Kafka commands in the upcoming posts.

